TensorFlow notes based on CS20SI (course homepage).

Main topic of this section: Graphs and Sessions

Tensor

An n-dimensional array

- 0-d tensor: scalar (number)

- 1-d tensor: vector

- 2-d tensor: matrix

- and so on

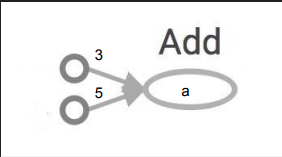
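The rank/shape idea can be seen without TensorFlow. The sketch below treats a tensor as a rectangular nested Python list and computes its shape; the rank is the length of that shape. `shape_of` is a hypothetical helper, not a TF function (in TF 1.x you would read `t.shape` or use `tf.rank`), and it assumes every sub-list has the same shape as the first.

```python
def shape_of(value):
    """Return the shape of a rectangular nested list as a tuple.

    A bare number is a 0-d tensor (shape ()), a flat list is 1-d,
    a list of lists is 2-d, and so on.
    """
    if not isinstance(value, list):
        return ()  # scalar: no dimensions
    if not value:
        return (0,)
    # Assumption: every element has the same shape as the first one.
    return (len(value),) + shape_of(value[0])


scalar = 3
vector = [1, 2, 3]
matrix = [[1, 2, 3], [4, 5, 6]]

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    shape = shape_of(t)
    print(name, "rank =", len(shape), "shape =", shape)
```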

Data Flow Graphs

- Nodes: operators, variables, and constants

- Edges: tensors

```python
import tensorflow as tf

a = tf.add(3, 5)
print(a)  # Tensor("Add:0", shape=(), dtype=int32)
```

TF automatically names the nodes when you don't name them explicitly: in the visualized graph of this example, the two constant inputs are labeled x (= 3) and y (= 5).
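The `Add:0` in the printed tensor reads as "output 0 of the op named `Add`". The pure-Python sketch below mimics that naming pattern under a stated assumption: a node defaults to its op type as its name, and repeats get a numeric suffix (`Add`, `Add_1`, ...), which is the pattern TF 1.x graphs typically show. `ToyGraph` and `tensor_name` are hypothetical, not TensorFlow APIs.

```python
class ToyGraph:
    """Hypothetical toy graph that only mimics TF-style automatic naming."""

    def __init__(self):
        self._counts = {}  # base name -> number of times it has been used

    def unique_name(self, op_type, name=None):
        """Use the explicit name if given, else the op type; de-duplicate."""
        base = name or op_type
        count = self._counts.get(base, 0)
        self._counts[base] = count + 1
        # The first use keeps the base name; later uses get _1, _2, ...
        return base if count == 0 else f"{base}_{count}"


def tensor_name(op_name, output_index=0):
    """A tensor is named after the op that produces it plus an output index."""
    return f"{op_name}:{output_index}"


g = ToyGraph()
print(g.unique_name("Add"))                  # Add
print(g.unique_name("Add"))                  # Add_1
print(g.unique_name("Add", name="my_sum"))   # my_sum
print(tensor_name(g.unique_name("Add")))     # Add_2:0
```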

Computation graph

Create a session and assign it to the variable `sess` so we can use it later. Within the session, evaluate the graph to fetch the value of `a`.

```python
import tensorflow as tf

a = tf.add(3, 5)
sess = tf.Session()
print(sess.run(a))  # 8
sess.close()
```

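A cleaner way to write this: the `with` block closes the session automatically, even if an error is raised inside it.

```python
import tensorflow as tf

a = tf.add(3, 5)
with tf.Session() as sess:
    print(sess.run(a))  # 8
```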

`tf.Session()`: a Session object encapsulates the environment in which Operation objects are executed and Tensor objects are evaluated.
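The key idea behind the session is deferred execution: `tf.add(3, 5)` only adds a node to the graph, and nothing is computed until a session runs it. The toy sketch below imitates both that and the `with` form; `Node`, `constant`, `add` and `ToySession` are hypothetical stand-ins written for illustration, not TensorFlow classes.

```python
class Node:
    """A hypothetical graph node: an operation plus the nodes it consumes."""

    def __init__(self, fn, *inputs, name):
        self.fn, self.inputs, self.name = fn, inputs, name

    def __repr__(self):
        # Like printing a TF 1.x tensor: you see a description, not a value.
        return f"Node({self.name!r})"


def constant(v, name):
    return Node(lambda: v, name=name)


def add(x, y, name="Add"):
    return Node(lambda a, b: a + b, x, y, name=name)


class ToySession:
    """Evaluates nodes on demand; usable as a context manager."""

    def __init__(self):
        self.closed = False

    def run(self, node):
        if self.closed:
            raise RuntimeError("Attempted to use a closed session.")
        # Recursively evaluate the inputs, then apply this node's function.
        return node.fn(*(self.run(i) for i in node.inputs))

    def close(self):
        self.closed = True

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()  # runs even if the body raised
        return False


a = add(constant(3, "x"), constant(5, "y"))
print(a)  # Node('Add'): building the graph computed nothing yet

with ToySession() as sess:
    print(sess.run(a))  # 8
print(sess.closed)  # True: leaving the with block closed the session
```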

More graphs

```python
import tensorflow as tf

x = 1
y = 2
op1 = tf.add(x, y)       # 3
op2 = tf.multiply(x, y)  # 2; tf.mul() has been deprecated
op3 = tf.pow(op2, op1)   # op2 ^ op1 = 2 ^ 3
with tf.Session() as sess:
    op3 = sess.run(op3)
    print(op3)  # 8
```

_Note: `tf.mul`, `tf.sub` and `tf.neg` are deprecated in favor of `tf.multiply`, `tf.subtract` and `tf.negative`._

```python
import tensorflow as tf

x = 2
y = 3
add_op = tf.add(x, y)             # 5
mul_op = tf.multiply(x, y)        # 6
useless = tf.multiply(x, add_op)
pow_op = tf.pow(add_op, mul_op)   # 5 ^ 6
with tf.Session() as sess:
    z = sess.run(pow_op)
    print(z)  # 15625
```

_Note: because we only want the value of `pow_op`, and `pow_op` doesn't depend on `useless`, the session won't compute `useless`, which saves computation._
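The pruning described in the note can be sketched in plain Python: evaluate a fetch by walking only its input edges and log every node that actually runs. `Node` and `run` here are hypothetical toy definitions, not TF internals; the values reuse the example above (x = 2, y = 3).

```python
class Node:
    """Hypothetical toy op: a name, a Python function, and input nodes."""

    def __init__(self, name, fn, *inputs):
        self.name, self.fn, self.inputs = name, fn, inputs


def run(fetch, trace, cache=None):
    """Evaluate `fetch` by visiting only its dependencies; log each node."""
    cache = {} if cache is None else cache
    if fetch.name not in cache:
        args = [run(i, trace, cache) for i in fetch.inputs]
        trace.append(fetch.name)  # this node was actually computed
        cache[fetch.name] = fetch.fn(*args)
    return cache[fetch.name]


x = Node("x", lambda: 2)
y = Node("y", lambda: 3)
add_op = Node("add_op", lambda a, b: a + b, x, y)
mul_op = Node("mul_op", lambda a, b: a * b, x, y)
useless = Node("useless", lambda a, b: a * b, x, add_op)
pow_op = Node("pow_op", lambda a, b: a ** b, add_op, mul_op)

trace = []
z = run(pow_op, trace)
print(z)                    # 15625, i.e. (2 + 3) ** (2 * 3)
print(trace)                # ['x', 'y', 'add_op', 'mul_op', 'pow_op']
print("useless" in trace)   # False: not on pow_op's dependency path
```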

```python
import tensorflow as tf

x = 1
y = 2
add_op = tf.add(x, y)
mul_op = tf.multiply(x, y)
useless = tf.multiply(x, add_op)
pow_op = tf.pow(add_op, mul_op)
with tf.Session() as sess:
    z, not_useless = sess.run([pow_op, useless])
    print(z, not_useless)  # 9 3
```

_`tf.Session.run(fetches, feed_dict=None, options=None, run_metadata=None)`: pass all the tensors whose values you want as a list in `fetches`._
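When several fetches share a dependency (here both `pow_op` and `useless` need `add_op`), TensorFlow runs each op at most once per `run` call. The toy sketch below mimics that with one cache per call and accepts either a single node or a list, like `sess.run`; `Node` and `run` are hypothetical definitions, not the TF implementation.

```python
class Node:
    """Hypothetical toy op: a name, a Python function, and input nodes."""

    def __init__(self, name, fn, *inputs):
        self.name, self.fn, self.inputs = name, fn, inputs


def run(fetches, evaluated=None):
    """Toy fetch: accepts one node or a list of nodes.

    A single cache is shared by all fetches in one call, so a node that
    several fetches depend on is evaluated only once per call.
    """
    cache = {}
    evaluated = [] if evaluated is None else evaluated

    def evaluate(node):
        if node.name not in cache:
            args = [evaluate(i) for i in node.inputs]
            evaluated.append(node.name)  # record evaluation order
            cache[node.name] = node.fn(*args)
        return cache[node.name]

    if isinstance(fetches, (list, tuple)):
        return [evaluate(f) for f in fetches]
    return evaluate(fetches)


x = Node("x", lambda: 1)
y = Node("y", lambda: 2)
add_op = Node("add_op", lambda a, b: a + b, x, y)
mul_op = Node("mul_op", lambda a, b: a * b, x, y)
useless = Node("useless", lambda a, b: a * b, x, add_op)
pow_op = Node("pow_op", lambda a, b: a ** b, add_op, mul_op)

log = []
z, not_useless = run([pow_op, useless], log)
print(z, not_useless)       # 9 3
print(log.count("add_op"))  # 1: shared by both fetches, computed once
```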

Distributed Computation

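```python
import tensorflow as tf

# To put part of a graph on a specific CPU or GPU:
with tf.device('/gpu:2'):
    a = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], name='a')
    b = tf.constant([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], name='b')
    c = tf.matmul(a, b)

# log_device_placement=True logs which device each op runs on.
# '/gpu:2' is the third GPU; without one, running fails unless
# allow_soft_placement=True is also set in the ConfigProto.
with tf.Session(config=tf.ConfigProto(log_device_placement=True)) as sess:
    print(sess.run(c))  # [[22, 28], [49, 64]] as a 2x2 float array
```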

Don't build multiple graphs: the session runs the default graph.

- Multiple graphs require multiple sessions, and each one tries to use all available resources by default

- You can't pass data between them without going through Python/NumPy, which doesn't work in distributed settings

- It's better to have disconnected subgraphs within one graph
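One graph can hold several independent pieces. The sketch below uses a plain dict as a hypothetical stand-in for a graph (node name to input names), finds its disconnected subgraphs, and shows that fetching `sum` only needs nodes from its own subgraph, so running one piece does not touch the other. `needed_for` and `connected_subgraphs` are illustrative helpers, not TF APIs.

```python
# Hypothetical toy graph: node name -> list of input node names.
graph = {
    "x": [], "y": [], "sum": ["x", "y"],       # subgraph 1
    "w": [], "b": [], "affine": ["w", "b"],    # subgraph 2 (no edge to 1)
}


def needed_for(graph, fetch):
    """Nodes reachable from `fetch` by following its edges (its inputs)."""
    needed, stack = set(), [fetch]
    while stack:
        node = stack.pop()
        if node not in needed:
            needed.add(node)
            stack.extend(graph[node])
    return needed


def connected_subgraphs(graph):
    """Group nodes into components, ignoring edge direction."""
    neighbors = {n: set(inputs) for n, inputs in graph.items()}
    for node, inputs in graph.items():
        for i in inputs:
            neighbors[i].add(node)  # add the reverse edge
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        # Reachability over the undirected view gives one component.
        component = needed_for(neighbors, start)
        seen |= component
        components.append(sorted(component))
    return components


print(connected_subgraphs(graph))        # [['sum', 'x', 'y'], ['affine', 'b', 'w']]
print(sorted(needed_for(graph, "sum")))  # ['sum', 'x', 'y']
```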

Why Graphs

1. Save computation: only run the subgraphs that lead to the values you want to fetch.

2. Break computation into small, differentiable pieces to facilitate automatic differentiation.

3. Facilitate distributed computation: spread the work across multiple CPUs, GPUs, or devices.

4. Many common machine learning models are already taught and visualized as directed graphs.
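Point 2 works because each small op only needs its own local derivative; the chain rule then combines them backwards through the graph (reverse-mode automatic differentiation). The scalar sketch below shows the idea with a hypothetical `Var` class and `backward` function. TF 1.x instead adds gradient ops to the graph (e.g. via `tf.gradients`); this toy version just computes the numbers directly.

```python
class Var:
    """Hypothetical scalar node that records how it was computed."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # (parent_node, local_gradient) pairs
        self.grad = 0.0

    def __add__(self, other):
        # d(a+b)/da = 1, d(a+b)/db = 1
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        # d(a*b)/da = b, d(a*b)/db = a
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))


def backward(output):
    """Reverse-mode autodiff: apply the chain rule from output to inputs."""
    order, seen = [], set()

    def visit(node):
        # Post-order: every node is appended after all of its parents.
        if id(node) not in seen:
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

    visit(output)
    output.grad = 1.0
    # Reversed order: a node's grad is complete before it is propagated.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += local * node.grad


x, y = Var(2.0), Var(3.0)
f = (x + y) * x  # f = x^2 + x*y, so df/dx = 2x + y and df/dy = x
backward(f)
print(f.value, x.grad, y.grad)  # 10.0 7.0 2.0
```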